The data science use-case; misunderstandings and misconceptions

Data To Dollars is a Knowledge Network, Coaching and Management Consulting Firm which specializes in helping organizations create and manage their data monetization programs.

Building a data-driven organization is one of the essential stepping stones towards data monetization use cases and many other advanced revenue generating and competitive edge use cases. What are the building blocks? What is best practice? Data To Dollars frequently invites thought leaders to share their reflections on our new blog.

“We want to predict -X-.” Fill in any desirable topic in the place of X and you have the stereotypical formula of a use-case in the way many organizations currently think about analytics use-cases. In order of increasing probability of success of such use-cases: X equals the winning lottery number, the future price of crude oil, windows falling from high-rise buildings (no, that isn’t a joke – it’s an actual use-case) and finally, a customer buying a mortgage.

This post considers the importance of use-case identification. The word ‘identification’ hints at the effort involved. Identifying a use-case is similar to planning a renovation of your house: rather than dreaming up a random extension to your home, you will likely take great pains to consider your desired outcome (its size, materials, resources and fit with the existing property), which eventually leads to a very detailed plan, with a bill of materials, costs, a timeline and so on.

Good, now, let’s consider an example where the use-case is taken too lightly.

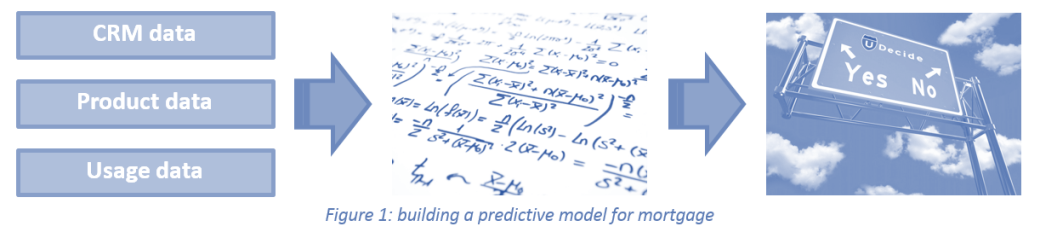

An Australian bank wants to predict which customers are likely to buy a mortgage.

This sounds like a reasonable use-case, right? In discussions, it turned out that the bank already had a preconceived idea. The use-case was already decided on: predict mortgage take-up. Next, given the recent hype around deep learning, the bank had set its sights on neural networks of some kind. ‘Traditional’ machine learning simply would not do: another take-it-or-leave-it condition. Data sources were provided as indicated in Figure 1. Finally, the question of how the model was intended to be used was deemed irrelevant: that was a matter for marketing. After all, with the success criterion set at a model accuracy of 80%, a model is a model and leads to predictions; marketing knows how to deal with lead lists.

So, the use-case is done. Time to bring in the guy with the sexiest job of the 21st century [1]. In a previous conversation, he had muttered something about ‘Dueling deep double Q-learning’ and ‘Dilated pooling layer highway networks’, so the bank was highly convinced of his magic.

The data

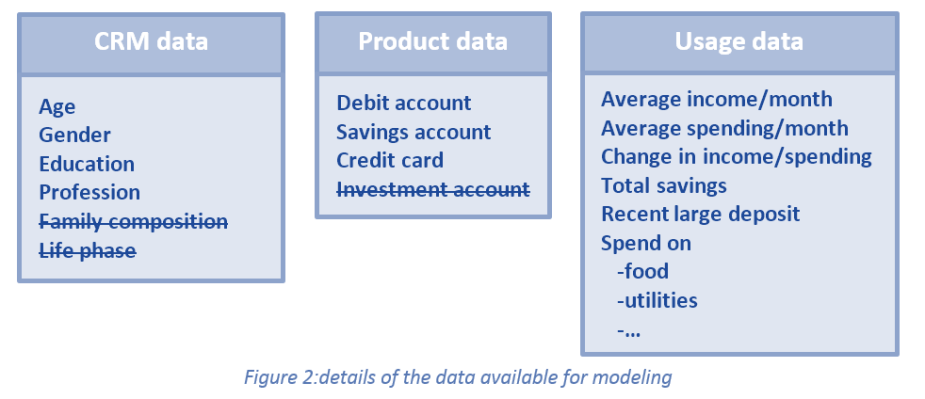

At first sight, the modeling data (Figure 2) looked rich. On closer inspection, however, it showed some shortcomings.

First, the CRM data lacked deeper insight into the customer, such as family composition or a description of life phases, although the available data was clean and complete.

For product data, the bank had gone through a merger: a retail bank and a private investment bank had merged, and the customers of the two had not yet been mapped to each other. Hence, from a retail perspective, any investment account, including investment behavior, was invisible for modeling.

For usage data, there were high-level indices such as incoming and outgoing flows and the changes thereof. A recent project had developed event-based marketing signals, so detections of large deposits and the like were also available.

Finally, to better understand customer behavior, the bank had gone through an effort to classify transactions into high-level categories such as food and utilities.

The model and its true face

The model was built and the company was delighted to learn that the model accuracy was 94.7%. Hail the data scientist! The lead list was generated, and sent to the call center! All were eager for the results.

After a few days of calling, disappointment started to kick in: maybe they had sent in the wrong list since none of the customers was even considering a mortgage at the time!

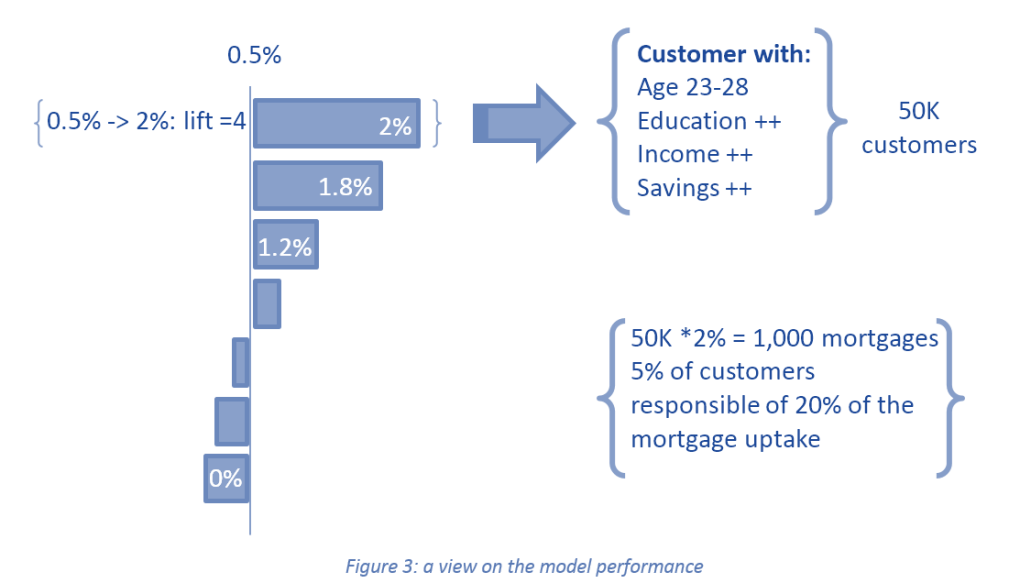

A post-mortem was conducted. The list was found to be correct. In addition, the data scientist had identified a cut-off point for the propensities, finding a lift of 4 for the top 5% of customers, a number not uncommon for marketing models.

To better understand the model performance, let’s review the broader picture. The bank has a million customers and sells 5,000 mortgages per year, which results in a 0.5% take rate. If a model has a lift of 4 at 5%, it means that for the 5% of customers with the highest propensities, the take rate is 2%. In other words, the model is wrong for 98% of that subset. This is exactly what they experienced in the call center.
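The arithmetic above can be checked with a few lines of Python. This is only a sketch of the lift calculation using the figures quoted in this post; the function name and its parameters are made up for illustration.

```python
def top_segment_stats(customers, buyers_per_year, lift, top_fraction):
    """Translate a lift figure into the take rate inside the targeted segment."""
    base_rate = buyers_per_year / customers          # overall take rate
    segment_rate = lift * base_rate                  # take rate among the top-scored customers
    segment_size = round(customers * top_fraction)   # customers on the lead list
    expected_buyers = round(segment_rate * segment_size)
    return {
        "base_rate": base_rate,
        "segment_rate": segment_rate,
        "segment_size": segment_size,
        "expected_buyers": expected_buyers,
        "share_not_buying": 1 - segment_rate,
    }


# Figures from the post: 1,000,000 customers, 5,000 mortgages, lift of 4 at 5%.
stats = top_segment_stats(customers=1_000_000, buyers_per_year=5_000,
                          lift=4, top_fraction=0.05)
for name, value in stats.items():
    print(f"{name}: {value}")
```

With these inputs the lead list holds 50,000 customers, of whom about 1,000 are expected to take a mortgage within the year, so a call center agent reaches a non-buyer in roughly 49 out of 50 calls.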

Inspecting the 5% target group showed that the model identified all customers for whom a mortgage was indeed feasible: customers in their mid-twenties, with enough financial means and the socio-economic background to settle. Yet this was not at all what the bank was interested in: they wanted to identify customers who are on the verge of buying a mortgage, so that they can make a timely offer.

Another important aspect was uncovered: the model was not very dynamic. Scoring this model monthly would yield the same customers repeatedly. Indeed, how often do you move in and out of the age range of 23-28, or how often do your savings change significantly?

What Fails? The Analytics or The Use-Case?

It is well known that model accuracy can be a misleading measure: with a 0.5% take rate, a model that predicts that nobody buys is already 99.5% accurate. While the data scientist looked at more appropriate performance measures (AUC: 0.57, F1: 0.04), the bank focused only on accuracy as its success measure, which it had set at 80% without properly understanding what the number actually measures, and it took the 94.7% model accuracy as a go signal.
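To see how a high accuracy and a very low F1 can coexist, here is a minimal reconstruction of the confusion matrix. It rests on assumptions not stated in the post: the cut-off flags exactly the top 5% of customers as predicted buyers, the year's 5,000 buyers are the positive class, and the take rate in the flagged group is the 2% derived earlier. Under those assumptions the accuracy comes out at 94.7% and the F1 at roughly 0.04, in line with the figures quoted above; the bank's actual evaluation may well have differed.

```python
def confusion_metrics(tp, fp, fn, tn):
    """Return (accuracy, precision, recall, f1) for a binary confusion matrix."""
    total = tp + fp + fn + tn
    accuracy = (tp + tn) / total
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return accuracy, precision, recall, f1


customers = 1_000_000
buyers = 5_000
flagged = 50_000            # assumption: top 5% are predicted to buy

tp = 1_000                  # 2% of the flagged customers actually buy
fp = flagged - tp           # flagged, but did not buy
fn = buyers - tp            # bought, but were not flagged
tn = customers - flagged - fn

model = confusion_metrics(tp, fp, fn, tn)
# Trivial baseline: predict that nobody buys a mortgage.
baseline = confusion_metrics(tp=0, fp=0, fn=buyers, tn=customers - buyers)

print("model    accuracy=%.3f precision=%.3f recall=%.3f f1=%.3f" % model)
print("baseline accuracy=%.3f precision=%.3f recall=%.3f f1=%.3f" % baseline)
```

The baseline that never predicts a sale scores a higher accuracy than the model, which is why accuracy alone says little for a rare event such as a mortgage purchase.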

The real issue is two-fold: first, how the predictions were going to be used was never open for discussion, and second, it was never considered whether the available data could give rise to predictions of the required accuracy.

The data question

The two points above go hand in hand, but starting with the data: a model can only ever be as accurate as the structure in the data allows, whether you use ‘simple’ models or deep learning models. In the latter case, you even run the risk of being blinded by technicalities rather than focusing on the business implications of the prediction. Or, to phrase it with some irony:

An extraordinary model requires extraordinary data

Models are not magic, nor does data hide endless secrets. Here are a few questions one can ask to determine whether modeling could yield a model with the desired level of accuracy:

- What are conceivable relations between the predictor data and the target?

- How many customers have the same values of the predictor data, and are those values dynamic?

- If you have an expert manually inspect customer data records, can they indicate which customers are likely to buy soon?

Answering those questions for the mortgage case:

- Indeed, the model captured mortgage feasibility. If you have enough income or savings, you may or may not buy a house; if your income is too low, you can’t (ok, don’t think NINO – subprime lending). Also, being in a certain age range makes you more likely to buy a house. However, those indicators won’t provide a high-quality signal. This is where you see the usual (model) lifts of 3-6.

- Many customers have a similar combination of age range and income (and even banking products and spending habits). Yet, they are not all buying a house and hence, a model is limited to modeling (or ‘finding’) the average effect of the group. Also, as mentioned in the previous section, the dynamic nature of the data will indicate how quickly the likelihood to buy a mortgage increases or decreases. If the most important predictors are age and income, then month after month, the model will yield the same list of customers, and by the simple fact that customers buy a mortgage every 10 years, the model is bound to have low accuracy.

- An expert who manually inspected the data could identify feasibility, but by no means any strong signals. This is once more an indication that the resulting model will not be highly accurate.

The expert had more to say: if people get married, do not have a mortgage yet and are in the feasible category, then they are much more likely to be looking for a house. The same holds for customers living in a small house who have just had their first child. Another tip: if people do not extend their annual television cable contracts, it might indicate they are planning to move. Or what about customers subscribing to a realtor magazine?

It’s well worth listening to those stories and subsequently thinking about how to observe those things in data. Are people getting married? Well, your financial transactions show a huge marriage signature, given the costs and the enormous number of arrangements one must make. Think of the single transaction in the jewelry store that signals the upcoming proposal: your bank knows it earlier than your fiancée. Similar mechanisms can be considered for children on the way, or even subscriptions to magazines. Within the limits of privacy law, those signals can provide an invaluable understanding of customer life and hence of the proper alignment with financial products.

Don’t throw out the low-accuracy model; learn how to use it.

The previous section addresses how to select the data; this section focuses on understanding how to use the model, given its accuracy.

The simplest rule is: understand how well you do today, and if a model does better, use the model while keeping the action mechanism constant. For example, if your campaign response rate today is 1%, a (silly) predictive model that achieves a 2% campaign response doubles the effectiveness of your campaigns. However, few people would cheer for a model that is wrong 98% of the time.

Digging deeper into this: just as one treats customers according to their value, one should treat models according to their accuracy. You don’t get rid of low-value customers; you align your efforts with their value (another useful analytics project!). As for models: low-accuracy models are used for improving upon business rules. To further enhance the data, it is possible to code business rules into predictors and incorporate them in a predictive model. Understand this as calibrating the business rules against historical data.
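As a rough illustration of coding business rules into predictors, the sketch below turns a few of the expert's rules of thumb into 0/1 flags and then checks each flag against historical outcomes. All field names, the income threshold and the four toy records are invented for the example; they are not the bank's data or rules.

```python
MIN_INCOME = 5_000  # hypothetical monthly income threshold for feasibility


def rule_features(customer):
    """Encode hand-written business rules as 0/1 predictors."""
    feasible = 23 <= customer["age"] <= 28 and customer["monthly_income"] >= MIN_INCOME
    newlywed = feasible and customer["recently_married"] and not customer["has_mortgage"]
    return {
        "rule_feasible": int(feasible),
        "rule_newlywed_no_mortgage": int(newlywed),
        "rule_cable_not_renewed": int(customer["cable_contract_lapsed"]),
    }


def take_rate_by_flag(rows, flag):
    """Historical take rate for customers without (0) and with (1) a rule flag."""
    rates = {}
    for value in (0, 1):
        group = [r for r in rows if r[flag] == value]
        rates[value] = sum(r["bought"] for r in group) / len(group) if group else None
    return rates


# Toy history, made up purely to show the mechanics.
history = [
    {"age": 26, "monthly_income": 6000, "recently_married": True,
     "has_mortgage": False, "cable_contract_lapsed": False, "bought": 1},
    {"age": 27, "monthly_income": 7000, "recently_married": False,
     "has_mortgage": False, "cable_contract_lapsed": False, "bought": 0},
    {"age": 45, "monthly_income": 9000, "recently_married": False,
     "has_mortgage": True, "cable_contract_lapsed": True, "bought": 0},
    {"age": 24, "monthly_income": 3000, "recently_married": True,
     "has_mortgage": False, "cable_contract_lapsed": False, "bought": 0},
]

rows = [{**rule_features(c), "bought": c["bought"]} for c in history]
for flag in ("rule_feasible", "rule_newlywed_no_mortgage", "rule_cable_not_renewed"):
    print(flag, take_rate_by_flag(rows, flag))
```

In practice the flags would be fed into a predictive model alongside the other predictors, so that the historical data decides how much weight each rule deserves.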

Next, use the models to collect more data:

For the mortgage case, rather than calling up customers based on the lead list, one could send them an email newsletter with a link to a lending calculator. Subsequently, ensure that you capture which customers follow the link and use that data (after analytically processing it) as the next stage of the lead development process.

Maybe a healthy way to qualify what analytics can achieve is:

A model doesn’t solve your business problem: you do, with a model supporting you.

An Australian bank wants to develop an analytics-driven mortgage lead management process.

How is this for a use-case? Not your best elevator pitch, but far more realistic and aligned than the previous use-case. ‘Analytics-driven’ seems to indicate there are multiple analytics components, not a single 99% accuracy model. The words ‘develop’ and ‘lead management’ indicate an involved business partner on a journey, now joined by data scientists who can add an empirical perspective. The wording of the use-case opens the door to a wide range of analytical initiatives:

Analytical derivation of current renter vs. owner

Based on transaction-level data, one can detect whether a customer pays a mortgage or rent. This information will guide the items for a newsletter (“how to use your property as an investment” vs. “10 things to consider for buying your first home”).

Mortgage feasibility estimation

This is the ‘failing’ model discussed in this post. Based on income, expenses and savings, one can carve out a large group of customers who never need to be bothered with mortgage proposals (read: bother them with more relevant proposals).

Event detection

Look for specific signals in transaction-level data (e.g. marriage, children, divorce, job change).

Mortgage purpose prediction

Based on the above segmentation and additional transaction-level data, it is good practice for a bank to consider: ‘Is buying a house good for this customer?’ Purpose here means mimicking the advice of a financial advisor on this matter and applying it at scale.

Interest estimation based on click-through rate on newsletter items

One click isn’t equal to interest. Analytics can help purify signals. For example, for a “How much can I borrow?” calculator, relate the filled-out information to the known or derived income, or understand where filling out such a form sits in the timeline of buying a house.

Next Best Action for newsletter topics

Based on feasibility, customer segment, and click-through rates, predict which topics are of interest to customers to make up a personalized newsletter.

Lead list generation for call center

Finally, in the prospect phase, a predictive model based on the identified signals may be strong enough to justify making personal calls and having a mortgage conversation with a customer.
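To make the event detection initiative from the list above a little more concrete, here is a minimal sketch that flags unusually large transactions in a watched category, such as the jewelry purchase mentioned earlier. The category labels, the factor of 10 and the toy transactions are assumptions for illustration only; a real implementation would need far more careful signal design and would have to respect privacy law.

```python
from statistics import median


def detect_events(transactions, watched_categories=("jewelry",), factor=10.0):
    """Return transactions in watched categories that are unusually large.

    'Unusually large' is defined here, somewhat arbitrarily, as at least
    `factor` times the customer's median transaction amount.
    """
    amounts = [t["amount"] for t in transactions]
    if not amounts:
        return []
    threshold = factor * median(amounts)
    return [
        t for t in transactions
        if t["category"] in watched_categories and t["amount"] >= threshold
    ]


# Toy transactions for a single customer, invented for the example.
transactions = [
    {"category": "food", "amount": 40},
    {"category": "food", "amount": 55},
    {"category": "utilities", "amount": 60},
    {"category": "jewelry", "amount": 80},
    {"category": "utilities", "amount": 120},
    {"category": "jewelry", "amount": 2500},
]

events = detect_events(transactions)
print(events)
```

Only the large jewelry purchase is flagged; the small one stays below the threshold. Such a flag is a candidate signal to feed into the lead management process, not a prediction in itself.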

Final thoughts

Clearly, the list of initiatives is far from complete. Bear in mind that there’s no such thing as a complete list or an out-of-the-box process. At the end of the day, every bank (or, more generally, every industry) has its own processes, its own customer dynamics and its own data. However, the list of initiatives does show that analytics is not a one-shot model approach, but a welcome, empirically based accompaniment to existing business needs and processes. To develop this, consider giving the business an active role, ensure they are educated enough to look at predictive models from a business evaluation perspective, and ensure the development of an (in-house) data science team that can help identify the right use-cases.

After all, who doesn’t want to work with people that have the sexiest job of the 21st century…

[1] Davenport, Thomas H. and Patil, D.J.; Data Scientist: The Sexiest Job of the 21st Century, Harvard Business Review, October 2012

Dr. Olav Laudy is the Chief Data Scientist for IBM Analytics for the Asia-Pacific region and a member of Data To Dollars’ advisory board
